An overly simplified introduction to Google Deployment Manager. Part 2: Reusable Templates

Recap

This post is the second part of the Google Deployment Manager (GDM) series. For a simplified overview and evolution of operations followed by an introduction to GDM, check out the first part of the series here. For the sake of continuity, I will summarize the most critical concepts from part 1 of the series:

You can represent any infrastructure in GCP as a YAML configuration. If you have worked with Kubernetes, you will feel right at home. That makes sense, since Kubernetes originated at Google and uses a similar declarative style. The configuration file representing any GCP resource takes the following form:

name: <<name of the GCP resource>>
type: <<type of the GCP resource>>
properties:
  <<properties from api documentation for the respective type>>

Let’s build on this from here on.

Reusable Templates

Configuration files are an improvement over the days of ClickOps, but they are rigid, offer limited reusability, and quickly become unmanageable. Google recommends using templates to work around this issue. A template is a file written in either Python or Jinja. You define your payload in the template file and reference it from the config file. It is possible to define all your payloads in a single template file, but for the sanity of the future maintainer (who may or may not be you) and for better reusability and maintainability, it is better to define one template per resource.

The Task

Let’s assume that we are given a GCP project with all the relevant privileges. The task is to create:

- A network with one subnet.

- A firewall rule to allow SSH from anywhere.

- A VM in the above network with the Apache web server installed and a public IP.

The Roadmap

We shall first deploy the above setup using only a config file, and then using templates and a config file together, comparing the two approaches along the way. We have already seen a glimpse of the “config way” in part 1. For the templates, we are going to use Python. As mentioned before, GDM supports both Jinja and Python. I chose Python because of my prior experience with it and its overall popularity. Python is also more flexible and dynamic in this context. As per the GCP documentation, templates must meet these requirements:

- The template must be written in Python 3.x.

- The template must define a method called GenerateConfig(context) or generate_config(context).

- The context object contains metadata about the deployment and your environment, such as the deployment’s name, the current project, and so on.

- The method must return a Python dictionary.
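A minimal template that meets these requirements might look like the sketch below. The FakeContext class is a hypothetical stand-in so that the function can be exercised locally; at deploy time GDM builds and passes the real context object. The property autoCreateSubnetworks is simply passed through from the config, and the network payload is only an illustration.

```python
def generate_config(context):
    """Entry point that GDM calls; it must return a dictionary."""
    resources = [{
        # context.env['name'] is the resource name given in the config file
        'name': context.env['name'],
        'type': 'compute.v1.network',
        'properties': {
            # read from the properties block of the config file
            'autoCreateSubnetworks': context.properties['autoCreateSubnetworks'],
        },
    }]
    return {'resources': resources}


class FakeContext:
    """Hypothetical stand-in for the context GDM passes in (local testing only)."""

    def __init__(self, env, properties):
        self.env = env
        self.properties = properties


ctx = FakeContext({'name': 'demo-network'}, {'autoCreateSubnetworks': False})
print(generate_config(ctx))
```

Calling the entry point by hand like this is a cheap way to catch typos in a template before handing it to gcloud.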

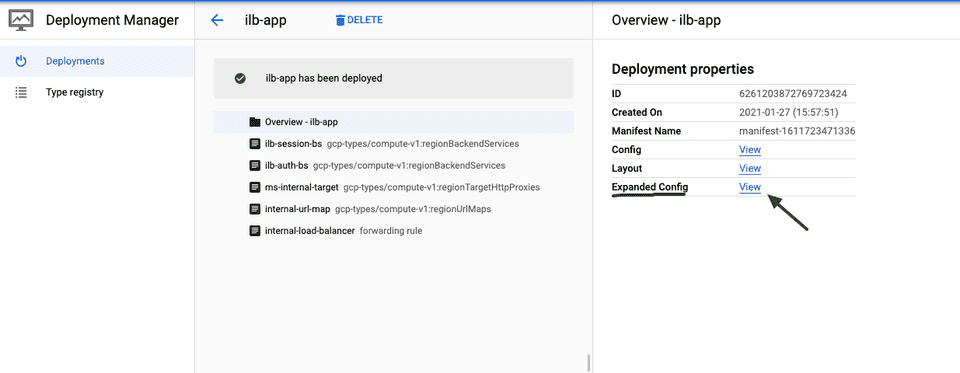

GDM parses the templates and config and expands it to a single config file before deployment. Looking a bit ahead, you can find the expanded config by going to your deployment in GCP console and selecting any deployment under Deployment Manager:

A side note on Python, especially if you are new to it: a basic understanding of the language is more than sufficient for any GDM IaC work. I’m in the process of building the IaC on GCP for a complex web application cluster, and I have not had to use anything beyond the very basics of Python and programming in general. I may write a separate post, “Python for GDM IaC”, so keep an eye out for that!

The Config Way

We have already seen a basic config that creates a VM here. This time we need to deploy three interdependent resources using one config: both the firewall rule(s) and the VM need to wait for the network to be created first. We need to introduce two new concepts to achieve this.

ref

It lets you point to another resource:

networkInterfaces:
- network: $(ref.test-network.selfLink)

Here, test-network is a network defined in the same config file. We will use this in the current and the next section.

dependsOn

It helps to create explicit dependencies.

resources:
- name: a-vm
  type: compute.v1.instances
  properties:
    ...
  metadata:
    dependsOn:
    - persistent-disk-a
    - persistent-disk-b
- name: persistent-disk-a
  type: compute.v1.disks
  properties:
    ...
- name: persistent-disk-b
  type: compute.v1.disks
  properties:
    ...

This tells GDM to wait for persistent-disk-a and persistent-disk-b before creating a-vm.
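To make the ordering idea concrete, here is a small sketch that derives a creation order from the dependsOn lists using a topological sort. This is only a model of the concept, not how GDM is implemented, and the function name creation_order is made up for this illustration. It relies on graphlib from the standard library, which needs Python 3.9 or later.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def creation_order(resources):
    """Return resource names ordered so that dependencies come first."""
    graph = {
        res['name']: set(res.get('metadata', {}).get('dependsOn', []))
        for res in resources
    }
    # static_order() yields every node after all of its predecessors
    return list(TopologicalSorter(graph).static_order())


resources = [
    {'name': 'a-vm',
     'metadata': {'dependsOn': ['persistent-disk-a', 'persistent-disk-b']}},
    {'name': 'persistent-disk-a'},
    {'name': 'persistent-disk-b'},
]
order = creation_order(resources)
print(order)  # the two disks (in either order) come before a-vm
```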

The Config File

With the above information in mind, here is the config to create the cluster described in the task:

small_cluster_only_config.yaml

resources:
# firewall rule
- name: allow-ssh
  type: compute.v1.firewall
  properties:
    allowed:
    - IPProtocol: tcp
      ports:
      - '22'
    description: ssh firewall enable from everywhere
    direction: INGRESS
    network: $(ref.network-10-70-16.selfLink)
    priority: 1000
    sourceRanges:
    - 0.0.0.0/0
  metadata:
    dependsOn:
    - network-10-70-16
# network and sub-networks
- name: network-10-70-16
  type: compute.v1.network
  properties:
    autoCreateSubnetworks: false
    name: network-10-70-16
- metadata:
    dependsOn:
    - network-10-70-16
  name: net-10-70-16-subnet-australia-southeast1-10-70-10-0-24
  type: compute.v1.subnetwork
  properties:
    description: Subnetwork of network-10-70-16 in australia-southeast1 created by GDM
    ipCidrRange: 10.70.10.0/24
    name: net-10-70-16-subnet-australia-southeast1-10-70-10-0-24
    network: $(ref.network-10-70-16.selfLink)
    region: australia-southeast1
# vm
- name: vm-one
  type: compute.v1.instance
  properties:
    zone: australia-southeast1-b
    machineType: https://www.googleapis.com/compute/v1/projects/playground-sukanta/zones/australia-southeast1-b/machineTypes/n1-standard-1
    disks:
    - deviceName: boot-disk-vm-one
      boot: true
      initializeParams:
        diskName: boot-disk-vm-one
        diskSizeGb: 20
        sourceImage: https://www.googleapis.com/compute/v1/projects/centos-cloud/global/images/family/centos-8
      type: PERSISTENT
    networkInterfaces:
    - accessConfigs:
      - name: public-ip-for-vm
        type: ONE_TO_ONE_NAT
      network: $(ref.network-10-70-16.selfLink)
      subnetwork: $(ref.net-10-70-16-subnet-australia-southeast1-10-70-10-0-24.selfLink)
  metadata:
    dependsOn:
    - network-10-70-16

Please save this as small_cluster_only_config.yaml and run the command below. Of course, change the project name to your own, both in the command and in the machineType URL in the config, and adjust any network or subnetwork parameters that clash with existing resources. The expected output is also shown below.

>> gcloud deployment-manager deployments create small-cluster --config=small_cluster_only_config.yaml --project=my-playground

The fingerprint of the deployment is b'J2t87BWs2CpBngy85879jfka0t78gdjk1Q=='

Waiting for create [operation-1610258040803-5b8887c6674e3-36607482-8b207l01]...done.

Create operation operation-1610258340803-5b8857c6674e3-36608682-8b227e01 completed successfully.

NAME TYPE STATE ERRORS INTENT

allow-ssh compute.v1.firewall COMPLETED []

network-10-70-16 compute.v1.network COMPLETED []

net-10-70-16-subnet-australia-southeast1-10-70-10-0-24 compute.v1.subnetwork COMPLETED []

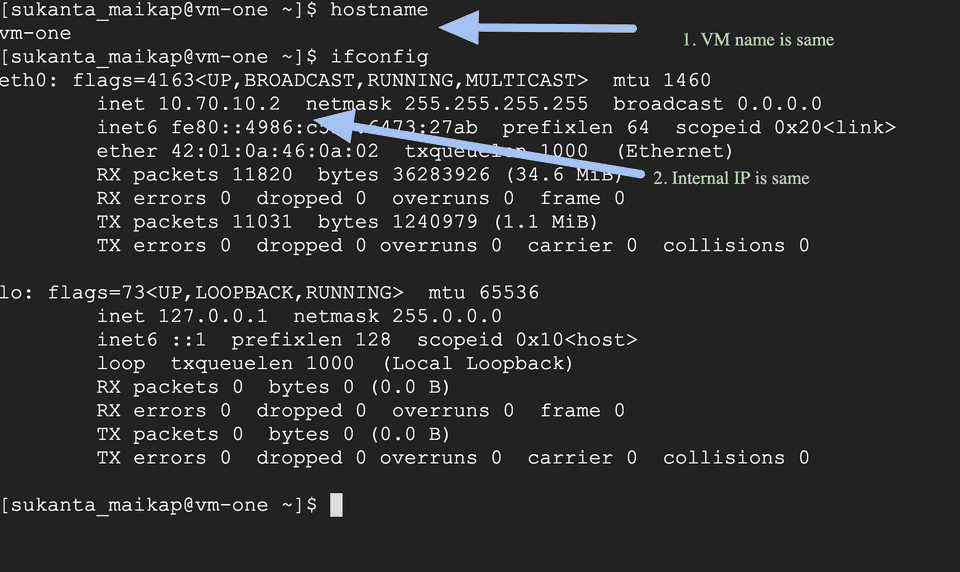

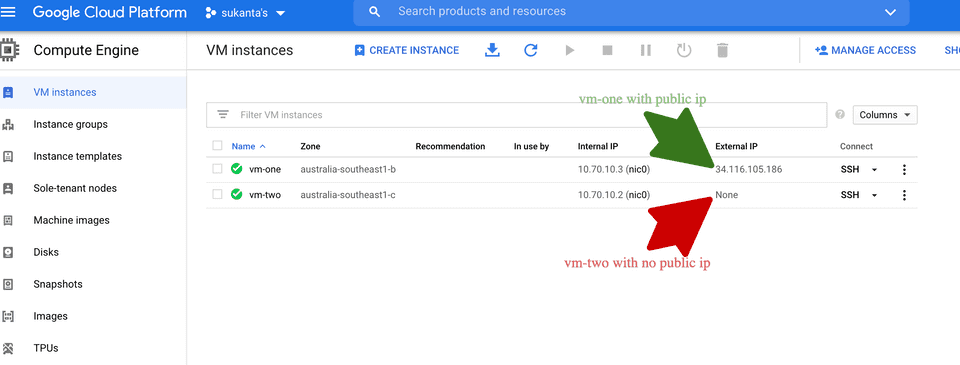

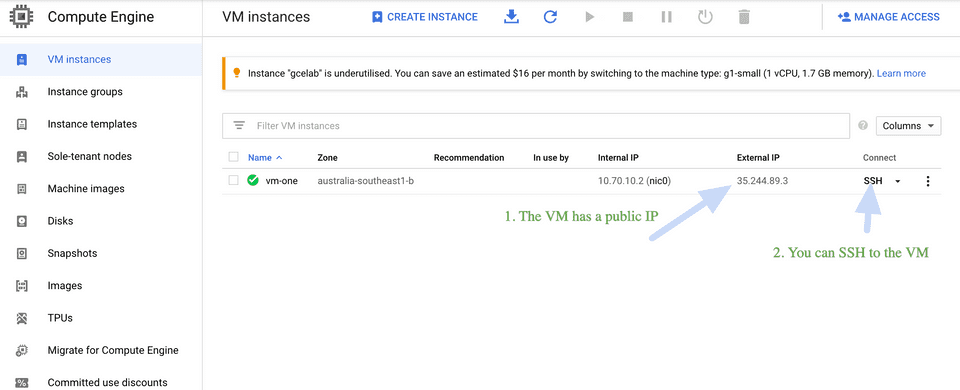

vm-one compute.v1.instance COMPLETED []Please inspect all the resources created above by going to GCP console. Also make sure the VM has a public IP and you can ssh to the VM. Below screenshots are for reference:

Go to Compute Engine -> VM Instances.

Now, imagine you have to create 10 VMs instead of 1. In the current format, you need to repeat the VM block above ten times. GDM’s solution for going around this is to use a template.
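To make the repetition problem concrete, here is a rough sketch of how a Python template can replace ten copied blocks with a loop. The properties count and zone are made up for this illustration, and the VM payload is trimmed to a single field, so it is not deployable as is. SimpleNamespace only stands in for the context object that GDM would pass.

```python
from types import SimpleNamespace


def vm_resource(name, zone):
    """Build one trimmed-down VM resource dictionary (illustrative fields only)."""
    return {
        'name': name,
        'type': 'compute.v1.instance',
        'properties': {'zone': zone},
    }


def generate_config(context):
    """Emit `count` VMs; `count` and `zone` are hypothetical template properties."""
    count = context.properties['count']
    zone = context.properties['zone']
    resources = [vm_resource('vm-%d' % i, zone) for i in range(1, count + 1)]
    return {'resources': resources}


# Stand-in for the context object, for local experimentation only.
ctx = SimpleNamespace(env={}, properties={'count': 10, 'zone': 'australia-southeast1-b'})
print([res['name'] for res in generate_config(ctx)['resources']])
```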

Please delete the above cluster before proceeding any further. We will create the same resources below, and the future operations may fail or act weird unless you delete the current resources.

>> gcloud deployment-manager deployments delete small-cluster --project=my-playground
The following deployments will be deleted:
- small-cluster

Do you want to continue (y/N)? y

Waiting for delete [operation-1611400906213-5b98f829b078e-bfbbc4cd-63126216]...done.
Delete operation operation-1611400906213-5b98f829b078e-bfbbc4cd-63126216 completed successfully.

REST Resources

Recall from Part 1 of this series that, when using GDM, all you are doing is supplying a payload to a REST server. For the list of fields you can use in the payload, have a look at the resources below:

The Template Way

The basic idea is to have the reusable part of the payload in a template file. The accompanying config file acts as an input for the template(s). In this format, the yaml file acts more like an orchestrator. GDM figures out the templates to construct the final payload by reading the yaml file. We need to reference the same API documentation mentioned here to construct the payload. At first, some or most of it may not make sense. But read on, I promise by the end of this post, it will. Let’s redo the same setup using a template (with a slight twist). This time, we will create 2 VMs instead of 1 to demonstrate the reusability aspect of templates.

We need to get used to two more concepts (along with ref and dependsOn) before we proceed any further. Both are closely related to ref and dependsOn:

env

For each deployment, GDM creates pre-defined environment variables that contain information about the deployment. These variables can be accessed from the Python template as context.env['name_of_the_variable']. Please check out the complete list of environment variables here.
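As a quick sketch of how a template reads these variables, the helper below builds a machineType URL from the project stored in context.env, the same way vm.py does further down. The SimpleNamespace object is a stand-in for the real context, and the values in it are sample data.

```python
from types import SimpleNamespace

_COMPUTE_URL_BASE = 'https://www.googleapis.com/compute/v1/'


def machine_type_url(context, zone, machine_type):
    """Build a full machineType URL using the project from context.env."""
    return ''.join([_COMPUTE_URL_BASE, 'projects/', context.env['project'],
                    '/zones/', zone, '/machineTypes/', machine_type])


# Stand-in for the context object; GDM fills env at deploy time.
context = SimpleNamespace(env={
    'deployment': 'small-cluster',
    'name': 'vm one',
    'project': 'my-playground',
})
print(machine_type_url(context, 'australia-southeast1-b', 'n1-standard-1'))
```

Reading the project from context.env means the template keeps working when the deployment is created in a different project.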

outputs

A reference written in a template file (in our case, a Python file) is evaluated only after the template has been processed and GDM has created the expanded config file. A template therefore cannot read the value behind a reference while it runs. To solve this, we expose the values we would like to reference (from other template files or from the accompanying config file) as outputs. In a Python template, the outputs are returned as a list under the outputs key of the returned dictionary. Output values can be:

- A static string

- A reference to a property

- A template property

- An environment variable

We extensively use this below when creating the network and subnetwork, as all other resources reference this resource as part of this deployment.

return {
    'resources': resources,
    'outputs': [
        {
            'name': 'network',
            'value': network_self_link
        },
        {
            'name': 'subnetworks',
            'value': subnets_self_link
        }
    ]
}

A detailed explanation is provided in the Connect The Dots section below.
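One way to picture how a reference such as $(ref.net-10-70-16.network) finds its value is the toy resolver below. It is only a mental model under simplifying assumptions (whole-string references, an optional list index), not GDM's actual resolution logic, and the names resolve and outputs_by_resource are invented for this sketch.

```python
import re

# Matches $(ref.<resource>.<output>) with an optional [index] suffix.
_REF = re.compile(r'^\$\(ref\.([\w-]+)\.(\w+)(?:\[(\d+)\])?\)$')


def resolve(value, outputs_by_resource):
    """Replace a reference to a template output with the output's value."""
    match = _REF.match(value)
    if not match:
        return value  # plain string, nothing to resolve
    resource, output, index = match.groups()
    resolved = outputs_by_resource[resource][output]
    return resolved[int(index)] if index is not None else resolved


# Outputs as the network template would expose them (values are still refs
# to the underlying resources, which GDM resolves later).
outputs = {
    'net-10-70-16': {
        'network': '$(ref.net-10-70-16.selfLink)',
        'subnetworks': [
            '$(ref.net-10-70-16-subnet-australia-southeast1-10-70-10-0-24.selfLink)',
        ],
    },
}
print(resolve('$(ref.net-10-70-16.network)', outputs))
print(resolve('$(ref.net-10-70-16.subnetworks[0])', outputs))
```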

Let’s now try to deploy the cluster with the above information in mind. I mention the file name above the code for each section below.

The Network(s) & Subnetwork(s)

I’m taking care of both network and subnetwork in a single template file. Feel free to split these up if you want to. I added enough comments in the code for reference.

vpc_network.py

"""
Creates a network and its subnetworks in a project.
"""

import re


def deduce_network_name(base):
    """
    Give a more meaningful network name & convert the string to valid resource name format
    """
    return re.sub(r'\W+', '-', base).lower()


def deduce_subnet_name(base, region, subnet_cidr):
    """
    Deduce subnet name from parameters & convert the string to valid resource name format
    """
    base = re.sub(r'\W+', '-', base).lower()
    subnet_cidr = re.sub(r'\W+', '-', subnet_cidr)
    effective_subnet_name = base + "-subnet-" + region + "-" + subnet_cidr
    return effective_subnet_name.lower()


def GenerateConfig(context):
    """
    GenerateConfig is the entry point. GDM calls this method to construct the payload for each template.
    You must have this method present in each template file.
    GDM takes care of converting the accompanying config file into a context object per resource.
    context.env is a dictionary which contains the environment variables, including the name of the resource.
    context.properties is one more dictionary which contains all the properties mentioned under that resource.
    """
    given_name = context.env['name']
    effective_vpc_name = deduce_network_name(given_name)

    # for output
    network_self_link = '$(ref.%s.selfLink)' % effective_vpc_name

    resources = [{
        'name': effective_vpc_name,
        'type': 'compute.v1.network',
        'properties': {
            'name': effective_vpc_name,
            'autoCreateSubnetworks': False,
        }
    }]

    # for output
    subnets_self_link = []

    # You can have one or more subnets associated with a network
    for subnetwork in context.properties['subnetworks']:
        subnet_name = deduce_subnet_name(given_name, subnetwork['region'], subnetwork['cidr'])
        subnet = {
            'name': subnet_name,
            'type': 'compute.v1.subnetwork',
            'properties': {
                'name': subnet_name,
                'description': 'Subnetwork of %s in %s created by GDM' % (effective_vpc_name, subnetwork['region']),
                'ipCidrRange': subnetwork['cidr'],
                'region': subnetwork['region'],
                'network': '$(ref.%s.selfLink)' % effective_vpc_name,
            },
            'metadata': {
                'dependsOn': [
                    effective_vpc_name,
                ]
            }
        }
        resources.append(subnet)
        subnets_self_link.append('$(ref.%s.selfLink)' % subnet_name)

    # GDM expects the return object to be a dictionary with the keys resources and outputs;
    # resources holds the final payload and outputs holds the values exposed to other resources
    return {
        'resources': resources,
        'outputs': [
            {
                'name': 'network',
                'value': network_self_link
            },
            {
                'name': 'subnetworks',
                'value': subnets_self_link
            }
        ]
    }

The Firewall Rule(s)

firewall_rule.py

"""
Creates firewall rules in a project.
"""

import re


def deduce_firewall_name(name):
    """deduce firewall name from the supplied name"""
    return re.sub(r'\W+', '-', name).lower()


def CreateRules(context):
    """
    Take a list of Firewall Rule Properties in the context
    Build a list of Firewall rule Dicts
    Return list
    """
    Firewall_Rules = []

    # Loop through the defined firewall rules and build a dict to append to the list
    for rule in context.properties['rules']:
        given_name = rule['name']
        rule_name = deduce_firewall_name(given_name)
        rule_description = rule['description']

        # Start of our firewall rule dict.
        Firewall_Rule = {
            'name': rule_name,
            'type': 'compute.v1.firewall'
        }

        rule_ipProtocol = rule['ipProtocol']
        rule_ipPorts = rule['ipPorts']
        rule_action = rule['action']
        rule_direction = rule['direction']
        rule_network = rule['network']
        rule_priority = rule['priority']

        # Build the properties key for this firewall rule dict.
        properties = {}
        if 'sourceRanges' in rule:
            rule_SourceRanges = rule['sourceRanges']
            properties['sourceRanges'] = rule_SourceRanges
        properties['priority'] = rule_priority
        properties['direction'] = rule_direction
        properties['description'] = rule_description
        properties['network'] = rule_network

        if rule_action == 'allow':
            allowed = [{
                'IPProtocol': rule_ipProtocol,
                'ports': rule_ipPorts
            }]
            properties['allowed'] = allowed
        elif rule_action == 'deny':
            denied = [{
                'IPProtocol': rule_ipProtocol,
                'ports': rule_ipPorts
            }]
            properties['denied'] = denied

        Firewall_Rule['properties'] = properties
        Firewall_Rules.append(Firewall_Rule)

    return Firewall_Rules


def GenerateConfig(context):
    resources = CreateRules(context)
    return {'resources': resources}

VM(s)

vm.py

"""
Create VM with a boot disk.
"""

import re

_COMPUTE_URL_BASE = 'https://www.googleapis.com/compute/v1/'


def deduce_name(base):
    """
    Helper function to translate vm name to a valid string from user input.
    """
    return re.sub(r'\W+', '-', base).lower()


def GenerateConfig(context):
    """
    GenerateConfig is the entry point. GDM calls this method to construct the payload for each template.
    You must have this method present in each template file.
    """
    vm_name = deduce_name(context.env['name'])

    resources = [
        {
            # payload type
            'type': 'compute.v1.instance',
            'name': vm_name,
            'properties': {
                'zone': context.properties['zone'],
                'machineType': ''.join([_COMPUTE_URL_BASE, 'projects/',
                                        context.env['project'], '/zones/',
                                        context.properties['zone'],
                                        '/machineTypes/', context.properties['machineType']]),
                'metadata': {
                    'items': []
                },
                'disks': [
                    {
                        'deviceName': 'bootdisk' + vm_name,
                        'type': 'PERSISTENT',
                        'boot': True,
                        'autoDelete': True,
                        'initializeParams': {
                            'diskName': 'boot-disk-' + vm_name,
                            'diskSizeGb': 20,
                            # BaseImage. Check the full list of base images here: https://cloud.google.com/compute/docs/images/os-details
                            'sourceImage': ''.join([_COMPUTE_URL_BASE, 'projects/',
                                                    'centos-cloud/global/',
                                                    'images/family/centos-8'])
                        }
                    }
                ],
                'networkInterfaces': []
            }
        }
    ]

    # Attach at least 1 network interface to a VM
    for networkInterface in context.properties['networkInterfaces']:
        network_interface = {}
        network_interface['network'] = networkInterface['network']
        network_interface['subnetwork'] = networkInterface['subnetwork']

        # The fields below are not mandatory, so we need to check whether they are present in the config file.
        if 'networkIP' in networkInterface:
            network_interface['networkIP'] = networkInterface['networkIP']

        # without accessConfigs, the VM won't have a public IP
        if 'accessConfigs' in networkInterface:
            access_configs = []
            for accessConfig in networkInterface['accessConfigs']:
                access_config = {}
                access_config['name'] = deduce_name(accessConfig['name'])
                access_config['type'] = 'ONE_TO_ONE_NAT'
                # for this to work, a public IP has to be reserved first
                if 'natIP' in accessConfig:
                    access_config['natIP'] = accessConfig['natIP']
                access_configs.append(access_config)
            network_interface['accessConfigs'] = access_configs

        resources[0]['properties']['networkInterfaces'].append(network_interface)

    return {'resources': resources}

The Accompanying Config File

small_cluster.yaml

imports:
- path: vpc_network.py
  name: vpc_network.py
- path: firewall_rule.py
  name: firewall_rule.py
- path: vm.py
  name: vm.py

resources:
- name: firewall-rules-for-deployment-1
  type: firewall_rule.py
  properties:
    rules:
    - name: allow ssh
      description: "tcp firewall enable from all"
      network: $(ref.net-10-70-16.network)
      priority: 1000
      action: "allow"
      direction: "INGRESS"
      sourceRanges: ['0.0.0.0/0']
      ipProtocol: "tcp"
      ipPorts: ["22"]
- name: net-10-70-16
  type: vpc_network.py
  properties:
    subnetworks:
    - region: australia-southeast1
      cidr: 10.70.10.0/24
- name: vm one
  type: vm.py
  properties:
    zone: australia-southeast1-b
    machineType: n1-standard-1
    networkInterfaces:
    - network: $(ref.net-10-70-16.network)
      subnetwork: $(ref.net-10-70-16.subnetworks[0])
      accessConfigs:
      - name: public ip for vm
- name: vm two
  type: vm.py
  properties:
    zone: australia-southeast1-c
    machineType: n1-standard-1
    networkInterfaces:
    - network: $(ref.net-10-70-16.network)
      subnetwork: $(ref.net-10-70-16.subnetworks[0])

The Overall Structure

Save all the above files in a flat format. The root of the project should have all the files, as shown below:

-/(project_folder)
 |
 |-- vpc_network.py
 |-- firewall_rule.py
 |-- vm.py
 |-- small_cluster.yaml

Deployment

Run the below from the root of the project. The expected output is also shown below:

>> gcloud deployment-manager deployments create small-cluster --config=small_cluster.yaml --project=my-playground
The fingerprint of the deployment is b'6wS-l3mbwxzyN1xxjoH2Rg=='
Waiting for create [operation-1611397899640-5b98ecf665d33-caa63588-3eb93aea]...done.
Create operation operation-1611397899640-5b98ecf665d33-caa63588-3eb93aea completed successfully.
NAME                                                    TYPE                   STATE      ERRORS  INTENT
allow-ssh                                               compute.v1.firewall    COMPLETED  []
net-10-70-16                                            compute.v1.network     COMPLETED  []
net-10-70-16-subnet-australia-southeast1-10-70-10-0-24  compute.v1.subnetwork  COMPLETED  []
vm-one                                                  compute.v1.instance    COMPLETED  []
vm-two                                                  compute.v1.instance    COMPLETED  []

Follow the steps mentioned here to validate the deployment. This cluster should have one more VM named vm-two with no public IP, because accessConfigs is not present for vm-two in the config file.
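The names in the output differ from those in the config file (vm one becomes vm-one, allow ssh becomes allow-ssh) because each template normalizes names before using them. The sketch below condenses that logic from the templates above so that you can try it in isolation; deduce_name here stands for the identical helpers that appear under different names in the three templates.

```python
import re


def deduce_name(base):
    """Replace each run of non-word characters with '-' and lowercase the result."""
    return re.sub(r'\W+', '-', base).lower()


def deduce_subnet_name(base, region, subnet_cidr):
    """Combine network name, region and CIDR into a valid subnet name."""
    return '-'.join([deduce_name(base), 'subnet', region, deduce_name(subnet_cidr)])


print(deduce_name('vm one'))     # vm-one
print(deduce_name('allow ssh'))  # allow-ssh
print(deduce_subnet_name('net-10-70-16', 'australia-southeast1', '10.70.10.0/24'))
# net-10-70-16-subnet-australia-southeast1-10-70-10-0-24
```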

Connect The Dots

Let’s break it down from here on. When I started working on Google Deployment Manager, I struggled with much of the implicit, tacit knowledge that Google’s documentation assumes. My aim here is to spell that out, so here it goes. I’ll do this in a Q&A format. Feel free to suggest items to cover; I would be more than happy to add them here.

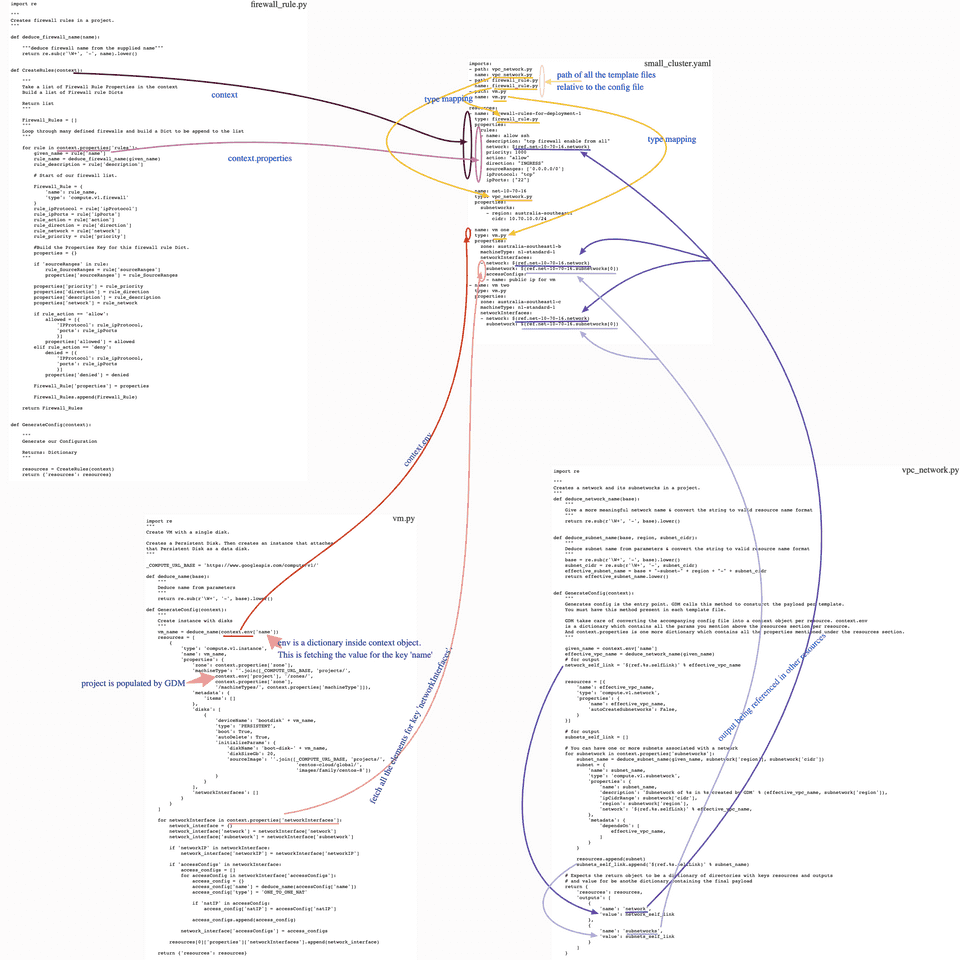

- How Deployment Manager figures out which template(s) to use?

Please recall that you pass only the config file (yaml) to gcloud deployment-manager deployments create command. You import the template(s) at the top of the config file as types and reference the types against each resource. Let’s trace this in our current example:

small_cluster.yaml

imports:

- path: vpc_network.py

name: vpc_network.py

- path: firewall_rule.py

name: firewall_rule.py

- path: vm.py

name: vm.py

resources:

- name: firewall-rules-for-deployment-1

type: firewall_rule.py

properties:

.

.

.

- name: net-10-70-16

type: vpc_network.py

properties:

.

.

.

- name: vm two

type: vm.py

properties:Notice the type field against each resource (VM, network or firewall rule) references the import name above. Deployment Manager follows this exact path to determine the templates to use.

- What is context in the template file?

Please recall that the Deployment Manager expects each template to define a method called GenerateConfig(context) or generate_config(context). Deployment Manager calls this method and passes the context object. The context object consists of two python directories, env containing the environment variables and properties is the python dictionary representation of the respective config.

I translated these very concepts in graphical format. I hope this helps:
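Both answers can also be condensed into a toy model in code. The sketch below looks up the template by the resource's type, builds a context from the resource's name and properties, and calls the template. It is a simplification for intuition only: IMPORTS, expand and vm_template are invented names, vm_template is a heavily trimmed stand-in for vm.py, and real GDM does considerably more (outputs, references, validation).

```python
from types import SimpleNamespace


def vm_template(context):
    """Stand-in for vm.py's GenerateConfig, trimmed to a single property."""
    return {'resources': [{
        'name': context.env['name'].replace(' ', '-'),
        'type': 'compute.v1.instance',
        'properties': {'zone': context.properties['zone']},
    }]}


# Plays the role of the imports section: import name -> template entry point.
IMPORTS = {'vm.py': vm_template}


def expand(config, project):
    """Call the template named by each resource's type and collect the results."""
    expanded = []
    for resource in config['resources']:
        template = IMPORTS[resource['type']]
        context = SimpleNamespace(
            env={'name': resource['name'], 'project': project},
            properties=resource.get('properties', {}),
        )
        expanded.extend(template(context)['resources'])
    return expanded


config = {'resources': [
    {'name': 'vm one', 'type': 'vm.py',
     'properties': {'zone': 'australia-southeast1-b'}},
    {'name': 'vm two', 'type': 'vm.py',
     'properties': {'zone': 'australia-southeast1-c'}},
]}
for resource in expand(config, 'my-playground'):
    print(resource['name'], resource['properties']['zone'])
```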

The Code

You can find all the code used in this post on GitHub.

What is next?

There still are a few more advanced topics to cover regarding GDM. Please head over to Part 3 of this series here.