Deleting old artifacts in Sonatype Nexus

- Read this first

- What’s at stake?

- Cleanup Policies

- The Shortcoming

- Groovy script to your rescue

- Reference

Read this first

This article was written for self-hosted Sonatype Nexus OSS version 3.14.0-14. Newer OSS versions have addressed many of the shortcomings described in this post. If you have the freedom to upgrade your Nexus instance or, even better, move to a paid version, do so!

What’s at stake?

If you are in any way responsible for managing a Sonatype Nexus instance, you are responsible for the lifeline of your company, because without the repository manager your development and release process comes to a standstill. One critical part of maintaining Nexus is making sure the system (VM or VMs) never runs out of disk space (I’m talking about self-hosted Nexus instances). You can add more disk space (after all, storage is cheap) each time your alerts scream that the repository manager is running out of room. You can also clean up artifacts or images manually, which is painstakingly slow and tedious. The best option is to let Nexus do it for you.

Cleanup Policies

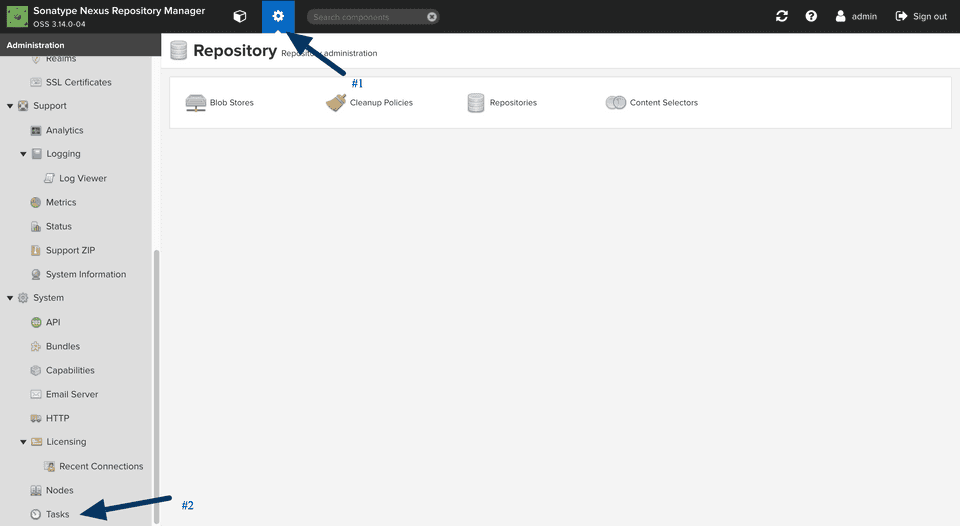

- Log in to Nexus as an admin user.

- Go to Server Administration and Configuration.

- Go to Repository >> Cleanup Policies.

- Click Create Cleanup Policy.

- Here, most things are self-explanatory. I would ask you to be careful about a few of them, though:

- Choose your Format, Published Before and Last Downloaded Before wisely.

- Choose your
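To make the effect of the two date criteria concrete, here is a minimal Groovy sketch. It assumes that a component must match every configured criterion before it becomes eligible for cleanup, and that both criteria are expressed as a number of days. The `isEligible` helper and the sample dates are hypothetical and are not part of Nexus.

```groovy
import java.time.LocalDate
import java.time.temporal.ChronoUnit

// Hypothetical helper: models "Published Before" and "Last Downloaded Before"
// as day counts and assumes both criteria must match (logical AND).
boolean isEligible(LocalDate published, LocalDate lastDownloaded, LocalDate today,
                   int publishedBeforeDays, int lastDownloadedBeforeDays) {
    long publishedAge = ChronoUnit.DAYS.between(published, today)
    long downloadAge = ChronoUnit.DAYS.between(lastDownloaded, today)
    return publishedAge > publishedBeforeDays && downloadAge > lastDownloadedBeforeDays
}

def today = LocalDate.of(2019, 6, 1)

// Old artifact that nobody has pulled for months: eligible for cleanup.
assert isEligible(LocalDate.of(2018, 6, 1), LocalDate.of(2018, 12, 1), today, 90, 30)

// Equally old artifact that a build downloaded last week: kept.
assert !isEligible(LocalDate.of(2018, 6, 1), LocalDate.of(2019, 5, 27), today, 90, 30)
```

Under this assumption, a generous Last Downloaded Before value protects old artifacts that builds still depend on, even when Published Before is aggressive.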

The Shortcoming

The cleanup policies lack a few proverbial teeth. For example, a cleanup policy applies to a whole repository; you cannot set one per component. This is a problem if your components have different release frequencies. At my current workplace, a few components have not been released for over a year. This means I have to set the cleanup policy for the whole repository to retain artifacts as old as the newest release of the least frequently released component. Not ideal!
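A small Groovy sketch of the arithmetic behind this problem follows. The component names and day counts are made up for illustration, and only the Published Before criterion is considered.

```groovy
// Hypothetical figures: days since each component's most recent release.
def daysSinceLastRelease = [
    'shipping': 12,
    'rates'   : 20,
    'legacy'  : 400   // not released for over a year
]

// To keep the latest release of every component, a repository-wide
// "Published Before" threshold has to be at least the largest gap.
int safeThresholdDays = daysSinceLastRelease.values().max()
assert safeThresholdDays == 400

// A frequently released component would be fine with about 30 days,
// yet the shared policy makes it hold on to far more history.
int wantedForShipping = 30
assert safeThresholdDays - wantedForShipping == 370
```

In this made-up case, the rarely released component dictates the retention for every other component in the repository.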

Groovy script to your rescue

Nexus allows you to define custom cleanup policies via Tasks. You need to write a bit of Groovy, though. Here are the steps:

- Log in to Nexus as an admin user and go to settings.

- Go to Tasks from the left panel.

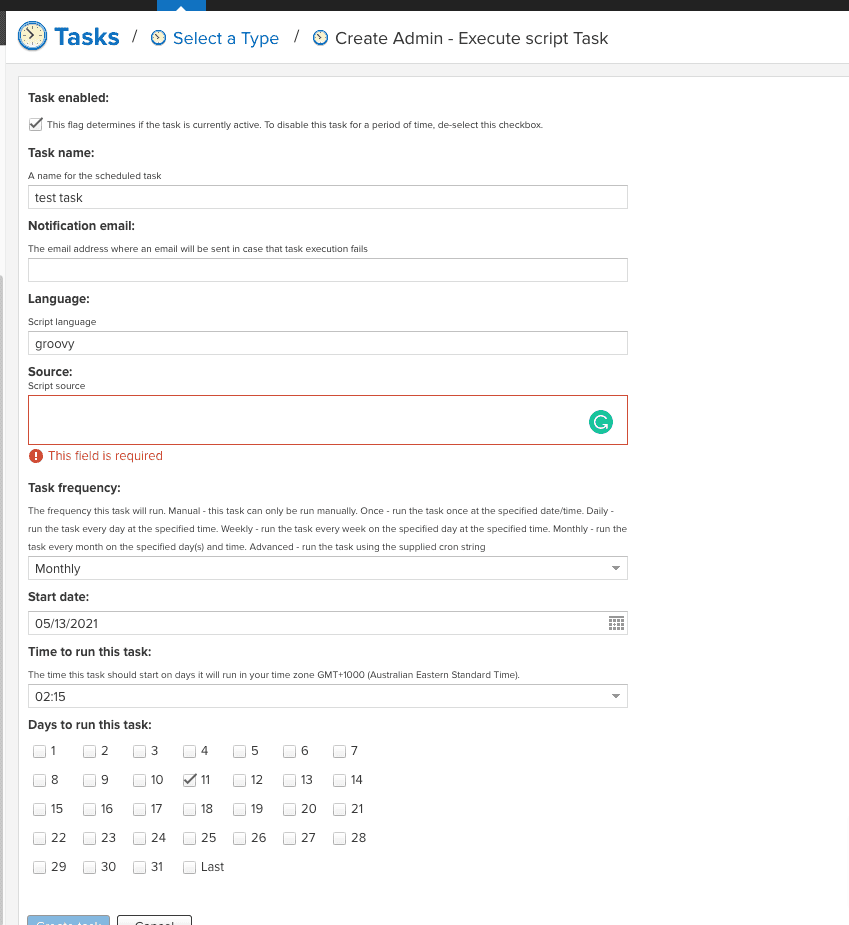

- Hit Create Task and choose Admin - Execute Script.

- The script screen is self-explanatory. In the script below (this is where you paste it), I’m cleaning up two components named au.com.42.shipping and au.com.42.rates, both of which live under maven-releases. I want to delete all artifacts of these components that are older than 30 days, while always keeping at least 50 artifacts per component.

    import org.sonatype.nexus.repository.storage.StorageFacet
    import org.sonatype.nexus.repository.maintenance.MaintenanceService
    import com.google.common.collect.ImmutableList
    import org.joda.time.DateTime

    // Delete artifacts older than this many days...
    def retentionDays = 30
    // ...but always keep at least this many of the newest ones per component.
    def retentionCount = 50
    def repositoryName = 'maven-releases'
    // Components to clean up, written as "group/name".
    def targetList = ["au.com.42/shipping", "au.com.42/rates"]

    log.info(":::Cleanup script started!")

    MaintenanceService service = container.lookup("org.sonatype.nexus.repository.maintenance.MaintenanceService")
    def repo = repository.repositoryManager.get(repositoryName)
    def tx = repo.facet(StorageFacet.class).txSupplier().get()
    def components = null

    // Read every component in the repository inside a storage transaction.
    try {
        tx.begin()
        components = tx.browseComponents(tx.findBucket(repo))
    } catch (Exception e) {
        log.error("Error: " + e)
    } finally {
        if (tx != null) {
            tx.close()
        }
    }

    if (components != null) {
        // Cutoff, rounded down to the start of the day.
        def retentionDate = DateTime.now().minusDays(retentionDays).dayOfMonth().roundFloorCopy()
        int deletedComponentCount = 0
        int compCount = 0
        def listOfComponents = ImmutableList.copyOf(components)
        // Group + name of the last target component seen; null until the first one.
        def previousComp = null

        // Walk the list backwards. This relies on all versions of a component
        // being adjacent in the list, with the newest ones visited first.
        listOfComponents.reverseEach { comp ->
            log.info("Processing Component - group: ${comp.group()}, ${comp.name()}, version: ${comp.version()}")
            if (targetList.contains(comp.group() + "/" + comp.name())) {
                log.info("previous: ${previousComp}")
                if (previousComp == comp.group() + comp.name()) {
                    // Another version of the same component.
                    compCount++
                    log.info("CompCount: ${compCount}, RetentionCount: ${retentionCount}")
                    // Only versions beyond the newest retentionCount are candidates.
                    if (compCount > retentionCount) {
                        log.info("CompDate: ${comp.lastUpdated()} RetDate: ${retentionDate}")
                        if (comp.lastUpdated().isBefore(retentionDate)) {
                            log.info("compDate before retentionDate: ${comp.lastUpdated()} isBefore ${retentionDate}")
                            log.info("deleting ${comp.group()}, ${comp.name()}, version: ${comp.version()}")
                            service.deleteComponent(repo, comp)
                            log.info("component deleted")
                            deletedComponentCount++
                        }
                    }
                } else {
                    // First version of a new target component: restart the count.
                    compCount = 1
                    previousComp = comp.group() + comp.name()
                }
            } else {
                log.info("Component skipped: ${comp.group()} ${comp.name()}")
            }
        }

        log.info("Deleted Component count: ${deletedComponentCount}")
    }

Save this with a sensible schedule, and it will save you a lot of time and spare you sudden surprises in the future.
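Before scheduling a task that deletes things, it can help to check the retention rule on plain data outside Nexus. The sketch below is a standalone Groovy approximation of that rule, not the Nexus script itself: the `Artifact` class and the `selectForDeletion` helper are hypothetical, and the input list is assumed to be sorted from newest to oldest.

```groovy
import java.time.LocalDate

// Hypothetical stand-in for a Nexus component: a version and a last-updated date.
class Artifact {
    String version
    LocalDate lastUpdated
}

// Returns the artifacts that would be deleted: those beyond the newest
// `retentionCount` entries whose last update is older than the cutoff.
// Assumes `newestFirst` is already sorted from newest to oldest.
List<Artifact> selectForDeletion(List<Artifact> newestFirst, int retentionCount, LocalDate cutoff) {
    newestFirst.drop(retentionCount).findAll { it.lastUpdated.isBefore(cutoff) }
}

def today = LocalDate.of(2019, 6, 1)
def cutoff = today.minusDays(30)

// Five versions, one released every 10 days; 1.0.5 is the newest.
def artifacts = (5..1).collect { n ->
    new Artifact(version: "1.0.${n}", lastUpdated: today.minusDays((5 - n) * 10))
}

// Keep the two newest regardless of age; of the rest, only 1.0.1 is
// older than the 30-day cutoff.
def doomed = selectForDeletion(artifacts, 2, cutoff)
assert doomed*.version == ['1.0.1']
```

Both conditions have to hold before anything is removed, so a component with fewer versions than the retention count is never touched, however old its artifacts are.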